Introduction

I recently joined Stephen Foskett from Gestalt IT on a video podcast to discuss “Improving AI with Transfer Learning”. The episode is part of the “Utilizing AI” series, which focuses on practical applications of Artificial Intelligence (AI) and Machine Learning (ML) in the modern enterprise data center and cloud infrastructure.

The full description and the link to the podcast can be found here. For a direct link to the YouTube video, see below.

A primer on Transfer Learning

Transfer Learning takes the knowledge accumulated in pre-trained models and transfers it to a new model. The approach opens the door to solving more complex problems. The goal is to improve performance and reduce the overall time needed to train a new model.
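
To make the idea concrete, here is a minimal sketch in plain Python. It is not how a production framework does it: the “pre-trained” weights, the tiny dataset, and the names `PRETRAINED_WEIGHTS`, `frozen_features`, and `train_head` are all made up for illustration. The point it tries to show is that the pre-trained layer stays frozen while only a small new head is trained for the new task.

```python
import math

# Hypothetical stand-in for weights learned earlier on a large source task.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def frozen_features(x):
    """Apply the frozen pre-trained layer (tanh activation). Never updated here."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]


def predict(head, x):
    """New task head: logistic regression on top of the frozen features."""
    feats = frozen_features(x)
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], feats))
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=500, lr=0.5):
    """Train only the head with gradient descent; the pre-trained layer stays fixed."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x)
            error = predict(head, x) - y  # gradient of the log loss w.r.t. z
            head["weights"] = [w - lr * error * f
                               for w, f in zip(head["weights"], feats)]
            head["bias"] -= lr * error
    return head


# Made-up target-task data: the label is 1 when x[0] > x[1].
TARGET_DATA = [([2.0, 0.0], 1), ([0.0, 2.0], 0),
               ([1.5, 0.5], 1), ([0.5, 1.5], 0)]

head = train_head(TARGET_DATA)
```

Only three numbers (two head weights and a bias) are learned here, which is why this style of training tends to need less data and time than starting from scratch. In a real project the frozen part would be a large model loaded from an ML framework rather than a hand-written matrix.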

Transfer Learning is becoming a key driver for ML, and its economic value is snowballing. Enterprises can benefit from quickly extending existing pre-trained models with improved performance. On the other hand, enterprises with little experience can benefit from bootstrapping their ML projects with Transfer Learning.

For more details, please refer to my previously published blog dedicated to Transfer Learning.

Discussion topics

Some of the topics and questions addressed in the podcast are:

- What is Transfer Learning?

- How is Transfer Learning different from building models without Transfer Learning?

- Is Transfer Learning the model for Enterprise AI moving forward?

- How does it reduce the heavy lifting when building AI models?

- Does the use of Transfer Learning mean re-writing all my code?

- Transfer Learning and conversational AI

- Why does Transfer Learning help accelerate the democratization of AI?

- Will AI ever get us a model that is 100% effective (closing the gap)?

- Why is it important for AI applications to continue to learn?

- ML is about pattern matching

Conversational AI – Turing test

At the end of the podcast, I was asked the following question: “How long will it take for us to have a verbal conversational AI that can pass the Turing test and fool an average person into thinking that it is speaking with another person?” I thought it was an interesting question, considering this is my area of expertise. I did provide an answer in the podcast but wanted to elaborate on it in this blog.

As defined by Alan Turing in 1950, the Turing test is a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

In my opinion, it will take at most four years for a commercial system to fool me into believing I am speaking to another person. I previously worked in the speech business, so it is easy for me to spot inconsistencies and recognize AI at work. It might take less time for the average person to be fooled, as today’s technology is already quite convincing.

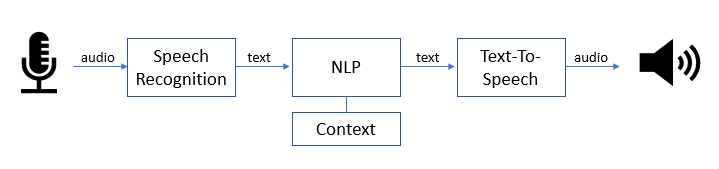

In conversational AI, the two main components that will make or break the Turing test are Text-To-Speech and Speech-To-Text.

Text-To-Speech (TTS)

The TTS engine is responsible for generating audio for a given text. The challenge is that the voice needs to be crisp and fluent, without sounding robotic, to pass the test. A TTS engine concatenates bits of sound into a streaming voice while applying the proper intonation. Selecting the right sound bits and seamlessly transitioning from one sound bit to another to create a fluent voice is essential to pass the test. The weakest link has always been the transition between two sound bits. Simply concatenating the two sounds rough and unnatural, and is a clear giveaway that the audio was machine-generated. To obtain a natural human flow, the sound bits need to melt together.
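
The difference between a hard join and a blended transition can be sketched in a few lines of Python. This is only an illustration of the general idea using a simple linear crossfade on lists of samples; it is not how any particular TTS engine works, and the function names `hard_concat`, `crossfade_concat`, and `max_jump` are hypothetical.

```python
def hard_concat(a, b):
    """Join two sound bits back to back, with no smoothing."""
    return a + b


def crossfade_concat(a, b, overlap):
    """Join two sound bits, linearly blending the last `overlap` samples
    of `a` with the first `overlap` samples of `b`."""
    if overlap < 0 or overlap > min(len(a), len(b)):
        raise ValueError("overlap must fit inside both sound bits")
    start = len(a) - overlap
    blended = []
    for i in range(overlap):
        t = (i + 1) / (overlap + 1)  # blend weight, rising from a towards b
        blended.append((1 - t) * a[start + i] + t * b[i])
    return a[:start] + blended + b[overlap:]


def max_jump(samples):
    """Largest step between neighbouring samples; big steps are heard as clicks."""
    return max(abs(y - x) for x, y in zip(samples, samples[1:]))


# Two made-up sound bits that end and start at very different levels.
first = [1.0] * 8
second = [-1.0] * 8

rough = hard_concat(first, second)
smooth = crossfade_concat(first, second, overlap=4)
```

With these made-up samples, the hard join jumps straight from 1.0 to -1.0, while the crossfaded version steps down gradually across the overlap, at the cost of a slightly shorter result.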

The newer generation of TTS engines uses real-time GPU processing driven by AI to achieve a natural human voice. The results are near perfect, and it is almost impossible to identify the voice as being generated by AI.

Speech-To-Text (aka Speech Recognition)

Speech Recognition presents more challenges due to its technical complexity and the variety of its sources. Just imagine how difficult it is to collect the data and build a reliable engine that works reasonably well for everybody who speaks a particular language. However, it is the use of Natural Language Understanding (NLU) with Natural Language Processing (NLP) that enables the conversational aspect of AI. It is the ability to decompose a request from a person, put it in a format that can be processed and analyzed by AI, and then re-assemble the result for human consumption. NLU is not limited to Speech Recognition and is applied wherever text processing is essential. Many organizations have dedicated research groups to advance and push forward NLU innovation. For example, Google uses NLU to improve its Search platform while Facebook uses NLU to create connections between users.

For more information on NLU and NLP, see my recent blog on “NLP: meaningful advancements with BERT”.

Figure 1 shows the different steps: recording speech, analyzing and processing the recognized text with NLP (mixed in with relevant context), and finally returning an answer using TTS.

Figure 1: From Speech Recognition to Text-To-Speech
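
The flow in Figure 1 can also be read as a chain of three function calls. The sketch below is a toy under heavy assumptions: every function is a hypothetical placeholder (the “recognizer” just decodes bytes, the “NLU” is keyword matching, and the “TTS” just encodes text), and the `default_city` context key is invented for the example. It only shows how the stages hand data to each other.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    slots: dict = field(default_factory=dict)


def speech_to_text(audio: bytes) -> str:
    """Placeholder recognizer: pretends the audio already holds UTF-8 text."""
    return audio.decode("utf-8")


def understand(text: str, context: dict) -> Intent:
    """Toy NLU: keyword matching, with context filling in missing details."""
    words = text.lower().rstrip("?.!").split()
    if "weather" in words:
        if "in" in words[:-1]:
            city = words[words.index("in") + 1]
        else:
            city = context["default_city"]  # fall back to what we already know
        return Intent("get_weather", {"city": city.title()})
    return Intent("unknown")


def generate_reply(intent: Intent) -> str:
    """Turn the structured intent back into text meant for a person."""
    if intent.name == "get_weather":
        return f"Looking up the weather for {intent.slots['city']}."
    return "Sorry, I did not understand that."


def text_to_speech(text: str) -> bytes:
    """Placeholder synthesizer: returns the text as bytes instead of audio."""
    return text.encode("utf-8")


def handle_request(audio: bytes, context: dict) -> bytes:
    """Speech in, speech out: STT, then NLU with context, then TTS."""
    text = speech_to_text(audio)
    intent = understand(text, context)
    return text_to_speech(generate_reply(intent))


context = {"default_city": "Boston"}
answer = handle_request(b"What is the weather in Paris?", context)
```

Keeping each stage behind its own function means any one of them could be swapped for a real engine without touching the others.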

Machine Translation

The holy grail of Conversational AI is the ability to engage with AI in multiple languages. Machine translation technology has not advanced enough to provide consistent and reliable translation across many languages. Most machine translation initiatives today translate between two fixed languages. It will take many more years of research before we have efficient machine translation for multiple languages.

Summary

As individuals, we never stop learning to increase our knowledge base and strive for innovation. The same is true for AI. The creation of an AI model is a starting point, not an end goal. The strength of AI lies in quickly adapting to a changing environment and learning from the past. AI needs a process that ensures the model reflects the environment’s changes as closely as possible (closing the gap) at all times. Transfer Learning is a process that can accelerate learning and gives enterprises opportunities to bootstrap and advance AI.

I want to thank Gestalt IT for having me as a co-host on their podcast.