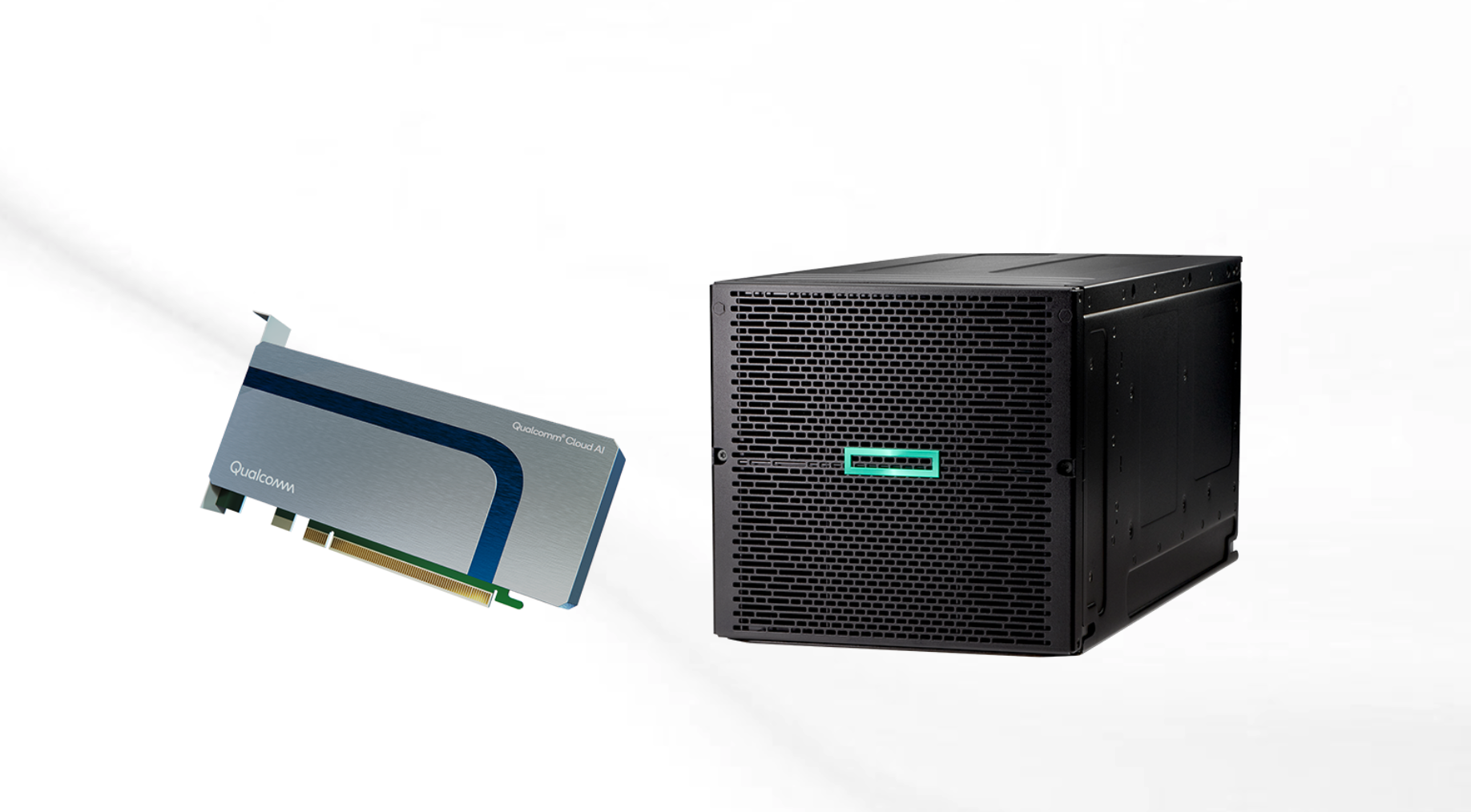

HPE and Qualcomm are collaborating to deliver high-performance, power-efficient solutions for customers deploying ML/DL models at the edge.

AI inference workloads are often larger in scale than training workloads, and they frequently must meet specialized requirements such as low latency and high throughput to deliver real-time results. That's why the best infrastructure for model deployment often differs from what's needed for development and training. In addition, customers deploying AI inference at the edge often need hardware that is not only high-performing and reliable but also compact and energy efficient.

To that end, HPE has collaborated with Qualcomm Technologies to bring customers an AI inference solution that combines high performance with power efficiency and is built to meet the unique challenges of edge deployments.

The HPE Edgeline EL8000 integrated with Qualcomm Cloud AI 100 accelerators delivered more than 75,000 inferences/second on computer vision workloads and 2,800 inferences/second on natural language processing (NLP) workloads. These results were achieved on HPE Edgeline, which is designed for operating environments from 0 to 55 °C and consumes less than 500 watts. HPE also posted leading power-efficiency results in this category. See www.com/ai/qualcomm-ai100 for details on these recent MLPerf results.

HPE Edgeline EL8000 systems with Qualcomm Cloud AI 100 accelerators are available now for deployments that require high performance, power efficiency, and ruggedized servers.

Learn more: HPE Edgeline inference platform with Qualcomm Cloud AI 100 accelerators.